- Threads faces scrutiny over data privacy, and users are urged to understand how their data is used and safeguarded.

- Meta’s Threads app raises alarms with its data collection, reigniting debates on digital consent.

Do you remember when everyone raved about Threads being the next “Twitter killer”? Yet shortly after users downloaded it, the enthusiasm faded, not just because it functioned like Twitter (now known as X) with fewer features, but also because of emerging data privacy concerns. It’s fascinating how swiftly the tides of tech trends can shift, especially when data privacy comes into the spotlight.

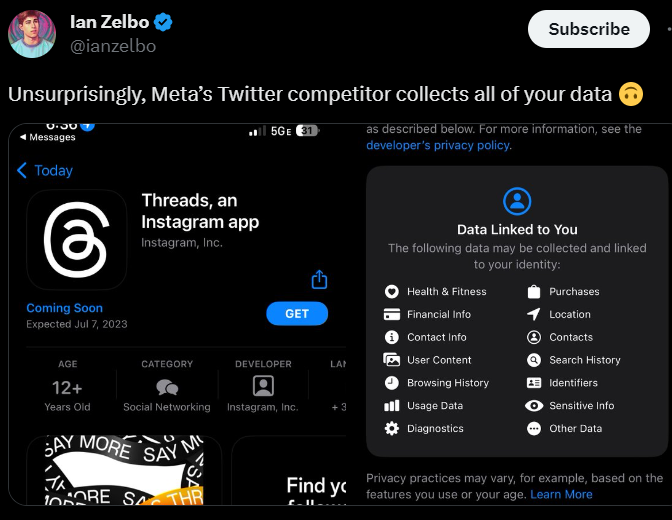

A major stir occurred when X users shared screenshots of Threads’ privacy policy from Apple’s App Store. Threads indicated that it might gather various personal details from its users, ranging from health and financial data to browsing history and location, which could be passed on to advertisers.

Data privacy issue: what do they know about you?

This suggests that Meta, an advertising behemoth, could share health-related user data with advertisers, for instance, enabling them to pitch products like diet beverages or fitness gear.

Moreover, both Threads and Instagram, according to the Apple App Store’s disclosure, could access sensitive details including race and religion.

Mark Weinstein, an expert in social media and online privacy, commented, “If people are looking for an alternative to Twitter that respects their data privacy and right to civil discourse, they will have to keep searching.”

X user @Ianzelbo commented on how Threads collects users’ data, which causes privacy concerns. (Source: X)

For those on Android, the Google Play Store provides a tad more autonomy. Users can adjust settings related to data sharing with Threads.

Eve Maler, CTO of ForgeRock, told Tech Wire Asia that Threads has reignited discussions about privacy and digital consent. She said, “we will see further refinements made to consent guidelines to mitigate ongoing confusion about digital consent. Unfortunately, traditional ‘opt in, opt out’ consent itself is becoming a dark pattern; users of online services know very well that they’re not fully able to choose what happens with their data, and the techniques used to persuade users to say ‘yes’ are increasingly suspect.”

This implies that while consent may give organizations broader rights to handle and distribute data, consent guidelines will inevitably come under strain as the app gains traction. Maler believes social media platforms crave data for tailoring user experiences and promoting specific products. Yet with the rising cost of obtaining genuine consent and growing mistrust among users, more transparent solutions will become imperative.

Why your personal data is Threads’ goldmine

The real question is: why is Threads interested in information like health stats and financial data? Because it’s tied to their primary revenue stream: advertising. In 2022, advertising constituted 97% of Meta’s total revenue.

By gathering data, the app can build intricate user profiles to be shared with third parties for targeted ads. As highlighted by Time, individual data can be highly sensitive. For example, data collected from someone battling an eating disorder might trigger ads about weight loss. (Meta, however, claims to have updated its privacy policy in 2022, giving users more ad control.)

While Threads is currently ad-free, an insider from Meta told Axios that advertising will be introduced once a significant user base is established.

In reality, the level of data access and storage that apps like Threads rely on is unnecessary. Contrast this with platforms like Signal, which prioritize encryption to protect user data. Tech firms’ choices about data, including its collection, use, sharing, and retention, aren’t preordained.

How Threads collects users’ data, causing privacy concerns worldwide. (Source – Shutterstock)

Meta’s run-in with Norwegian data protection laws

Meta’s entanglement in privacy controversies isn’t a new phenomenon. A recent report from Reuters highlighted that starting August 14, Meta faces a fine of 1 million crowns (equivalent to US$98,500) daily due to privacy violations. This penalty, announced by Norway’s data protection agency, could ripple throughout Europe.

On July 17, the oversight body, Datatilsynet, warned Meta of impending fines should they neglect to rectify identified privacy breaches.

Datatilsynet emphasized that Meta shouldn’t collect data such as the physical locations of Norwegian users to serve targeted ads—a practice known as behavioural advertising, a staple among many tech giants.

Meta had until August 4 to show Datatilsynet that it had taken corrective measures. On August 7, Tobias Judin, head of Datatilsynet’s international section, said the company would begin incurring the daily fine.

This penalty is scheduled to continue until November 3. Datatilsynet can make the fine permanent by escalating the decision to the European Data Protection Board. If supported, this could potentially broaden the ruling’s reach throughout Europe. As of now, this action hasn’t been initiated by Datatilsynet.

Recently, Meta announced plans to seek consent from European Union users before targeting ads based on their activity on platforms like Facebook and Instagram.

However, Judin found this measure insufficient. He called for an immediate halt to the personal data processing until an effective consent system is operational.

Judin commented on Meta’s timeline, “According to Meta, this will take several months, at the very earliest, for them to implement … And we don’t know what the consent mechanism will look like.”

This isn’t an isolated incident. Meta faced a staggering US$1.3 billion fine in the EU in May for privacy infringements. The firm responded by claiming it was unfairly targeted, noting that it relies on the same legal frameworks as numerous other companies operating in the EU. In the US, the Federal Trade Commission (FTC) has moved to broaden its 2020 consent order against Meta, alleging misleading representations regarding developers’ access to user data. X also recently voiced grievances over the FTC’s stringent requirements regarding its data policies.


Data privacy is a cornerstone of personal liberty in today’s digital world. For apps like Threads, the gravity of data privacy is magnified due to the nature of the collected information.

Utilizing apps like Threads entails sharing an extensive range of personal details. This can range from basic info such as names and addresses to intricate data about interests, habits, relationships, and even health or financial statuses. Once aggregated and analyzed, such data can paint a detailed picture of a user.

These constructed profiles serve various purposes, from customizing ads to influencing pivotal life decisions such as financial or medical eligibility. In the worst case, misused data can lead to fraud or identity theft.

Additionally, data privacy embodies personal respect, freedom, and control. Everyone deserves to dictate the accessibility and usage of their data. Losing such control erodes our self-determination.

Is Meta’s safety net failing?

In addition to facing criticism over privacy issues, Meta is under fire for allegedly disregarding reports about unsafe content. The Verge points out that while Meta claims its Trusted Partner program is central to enhancing its policies and safeguarding users, some partners argue otherwise. They suggest that Meta often overlooks this cornerstone initiative, resulting in it being underfunded, lacking manpower, and susceptible to operational hitches.

This criticism is front and center in a recent report by the media non-profit, Internews. The Trusted Partner program ropes in 465 worldwide civil society and human rights organizations. Its goal? To offer these partners a direct line to flag concerning content on Facebook and Instagram — from death threats to calls for violence. Meta has committed to giving priority to these alerts, ensuring swift action.

Yet Internews’ findings paint a contrasting picture. They suggest that some organizations within the program feel they are treated no differently from ordinary users. These organizations often find themselves waiting for feedback, facing neglect, and grappling with lackluster communication. The report emphasizes inconsistent response times from Meta, with the company sometimes being entirely unresponsive or failing to provide a rationale for its inaction. This alleged neglect is concerning, especially when it involves pressing issues such as grave threats or incitements to harm.

One unnamed partner lamented the delays, saying, “Two months plus. And in our emails we tell them that the situation is urgent, people are dying. The political situation is very sensitive, and it needs to be dealt with very urgently. And then it is months without an answer.”

Proactive steps for enhancing data privacy

It’s crucial for users to recognize how apps like Threads handle their data and to take steps to safeguard their privacy. This entails revisiting privacy settings, being informed about shared information, and opting for apps that value data privacy.

Strategies to bolster privacy on Threads include:

- Making profiles private through the app’s settings.

- Controlling mentions by adjusting settings in the privacy section.

- Muting or blocking disruptive accounts via the privacy settings or directly from individual posts.

- Concealing potentially offensive words by accessing “Hidden Words” in the privacy section.

- Opting to keep ‘like’ data confidential via the “Hide Likes” setting.

Although Threads users might choose to deactivate their profiles, the data might remain indefinitely on Meta’s servers. Current information suggests that a full data erasure option for Threads isn’t available.

In this digital era, data privacy is paramount. Platforms like Threads, Instagram, Facebook, X, and LinkedIn handle immense volumes of personal data. Users must stay vigilant about how this data is managed and safeguarded. Data privacy isn’t merely about security; it is also about human rights, personal freedoms, and dignity.

Social media platforms handle immense volumes of personal data, which makes prioritizing user privacy essential. (Source – Shutterstock)

Being conscious of data privacy empowers users to make enlightened choices about data sharing.

A well-informed user can employ strategies to defend their privacy, such as tweaking settings, curbing data disclosure, and selecting privacy-conscious services. Such heightened awareness can also kindle a demand for increased openness and responsibility from data-handling companies, fostering improved industry-wide privacy norms.
