The goal of PPC ad testing is to increase your click-through rate (CTR) and/or your conversion rate (CVR). Focusing on CTR should result in more traffic to your site, but it could be at the expense of your CVR. On the other hand, focusing on conversion rate should improve the quality of your traffic, but could hurt your CTR and reduce the number of potential customers visiting your site.

As advertisers, we are asked to maximize both CTR and CVR, which often requires totally different approaches. If you want to maximize the total number of conversions on your site, you need a different metric, one that considers both CTR and CVR. This metric is called Impression-to-Conversion, or I2C (Conversions/Impressions).
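Because CTR is clicks/impressions and CVR is conversions/clicks, I2C is simply their product. Here is a minimal Python sketch of that relationship. The helper name `i2c` and the numbers are made up for illustration and are not taken from the spreadsheet:

```python
def i2c(conversions: int, impressions: int) -> float:
    """Impression-to-conversion rate: conversions divided by impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return conversions / impressions


# Hypothetical ad: 10,000 impressions, 300 clicks, 15 conversions.
impressions, clicks, conversions = 10_000, 300, 15

ctr = clicks / impressions      # click-through rate
cvr = conversions / clicks      # conversion rate

print(f"CTR: {ctr:.2%}  CVR: {cvr:.2%}")
print(f"I2C: {i2c(conversions, impressions):.4%}")
# CTR * CVR gives the same value, up to floating-point rounding.
print(f"CTR x CVR: {ctr * cvr:.4%}")
```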

I recently created a free Excel download for determining statistically significant PPC ad tests. In the spreadsheet, you will notice that I have included the I2C calculation for those of you who need to maximize total conversions in your ad testing efforts.

Using I2C is a great way to combine the goals of ad testing:

- Improving CTR and Quality Score (QS)

- Improving conversion rate

Another way to look at these goals is with the question behind the test:

- Which ad variation generates the most traffic to my site (CTR)?

- Which ad variation generates the most qualified traffic to my site (CVR)?

Here is a smarter question to ask of your ad test:

- Which ad variation generates the most conversions on my site (CTR & CVR)?

Note that more conversions doesn’t necessarily equal more profit, just as more clicks doesn’t always equal more conversions. As always, you will still have to pay attention to costs and revenue.

Examples of I2C for Ad Testing

Example 1:

As you can see from the results, the CVRs for both ads are very similar and haven’t reached statistical significance yet. It would take about 17K clicks to reach statistical significance for CVR. I don’t want to wait for 17K clicks to make a decision (and leave money on the table), but I do want to consider CVR before I pick a winner. This is where I2C helps.

The results show a statistically significant I2C for the challenger ad. The 106% lift comes mostly from the challenger’s much better CTR, but by using I2C we are making a decision based on both the CTR and the CVR.

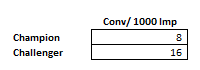
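For readers who want to check significance outside of Excel, one common approach is a pooled two-proportion z-test on conversions per impression. The sketch below is an assumption about how such a check could be done, not necessarily the method the spreadsheet uses, and the counts are hypothetical rather than the data from this example:

```python
import math


def i2c_z_test(conv_a: int, imp_a: int, conv_b: int, imp_b: int):
    """Pooled two-proportion z-test on I2C for ad A (champion) vs. ad B (challenger).

    Returns (z, two_sided_p_value).
    """
    p_a = conv_a / imp_a
    p_b = conv_b / imp_b
    pooled = (conv_a + conv_b) / (imp_a + imp_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / imp_a + 1 / imp_b))
    if se == 0:
        raise ValueError("cannot test: no variation in the data")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: champion 20 conversions, challenger 40,
# each on 10,000 impressions.
z, p = i2c_z_test(20, 10_000, 40, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 95%" if p < 0.05 else "not significant at 95%")
```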

A great way to report I2C is with conversions per 1000 impressions. For the above example it looks like this:

This view of the data really gets the point across.

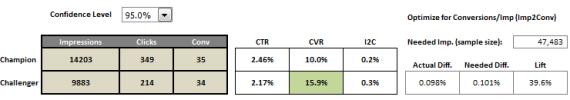
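Conversions per 1,000 impressions is just I2C multiplied by 1,000, and lift is the relative difference between the two ads. A small sketch with hypothetical numbers (the function names are mine, not from the spreadsheet):

```python
def conversions_per_thousand(conversions: int, impressions: int) -> float:
    """I2C expressed as conversions per 1,000 impressions."""
    return 1000 * conversions / impressions


def lift(champion: float, challenger: float) -> float:
    """Relative improvement of the challenger over the champion."""
    return (challenger - champion) / champion


# Hypothetical counts, not the data from the example.
champion = conversions_per_thousand(20, 10_000)
challenger = conversions_per_thousand(40, 10_000)

print(f"Champion:   {champion:.1f} conversions per 1,000 impressions")
print(f"Challenger: {challenger:.1f} conversions per 1,000 impressions")
print(f"Lift:       {lift(champion, challenger):.0%}")
```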

Example 2:

In this next example, the CTR is similar and the CVR is showing statistical significance. Again, if we look to I2C, we can make our decision based on both metrics and achieve a 40% lift with 90% confidence. To reach a higher confidence level, we would have to let the test run longer.

Using I2C allows for consistent decision making, so you don’t have to bounce back and forth between CTR and CVR.

Example 3:

In this last example, we have a statistically significant loser for CTR and winner for CVR. In this situation, we should let the test run until we reach the needed 25K impressions and hope that we can maintain our 22% lift for I2C.
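If you want a rough idea of how many impressions a test like this needs, the standard sample-size approximation for comparing two proportions is one option. The sketch below assumes a two-sided test and 80% power, neither of which is stated in this post, so it will not necessarily reproduce the spreadsheet's figures. The baseline rate is hypothetical:

```python
import math
from statistics import NormalDist


def impressions_needed(base_i2c: float, relative_lift: float,
                       confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate impressions per ad needed to detect a relative lift in I2C."""
    p1 = base_i2c
    p2 = base_i2c * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical baseline: 2 conversions per 1,000 impressions, 22% lift.
print(impressions_needed(0.002, 0.22))
# Lowering the confidence level reduces the impressions required.
print(impressions_needed(0.002, 0.22, confidence=0.90))
```

Smaller lifts and lower baseline rates both push the required impressions up quickly, which is why tests on low-volume ad groups can take a long time to resolve.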

Note that results like these come from the champion ad aligning more closely with user intent, while the challenger ad aligns more closely with our service. In short, the challenger ad is qualifying the lead. We don’t want you to click if you aren’t a good fit for the service we provide, but we still want to stretch our reach into less targeted markets.

Final Thoughts

While I2C may not be the best metric for improving Quality Score, it should align more closely with most business goals (conversions or sales). If possible, you should look at the overall profitability of your text ads, but depending on your goals, I2C can cut through the confusion around which ad testing metric (CTR or CVR) to use in your testing efforts.

Here is another free Excel download for testing statistical significance using Google math for text ad split testing, but it won’t estimate needed sample size.

Chad Summerhill is the author of the blog PPC Prospector.
