I am not particularly excitable, and I am by no means sentimental.

I’ve been known to remain on an even keel amidst, say, a surging comeback from a 28-3 deficit. I can throw out childhood photographs like they were table scraps without batting an eyelash. Commercials depicting the unbreakable bond between man and Clydesdale do not incite even a single tear…

It would appear I am in the minority.

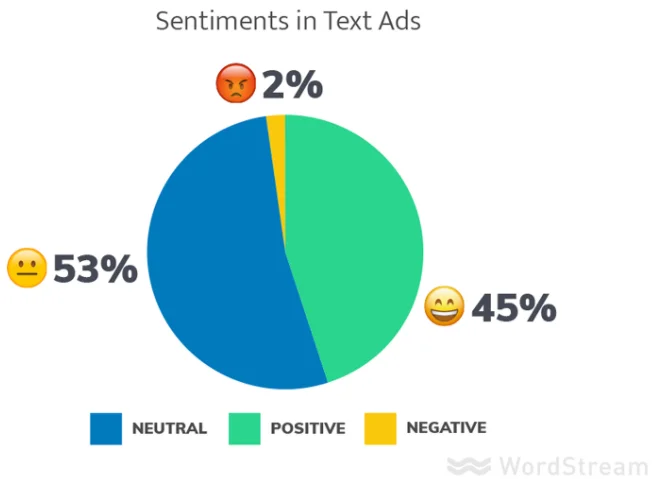

When we analyzed 612 top-performing AdWords text ads, we found that copy appealing to positive human emotions appears in 45% of these out-of-this-world ads.

Something to smile about

But guess what? Looking at data in aggregate can be misleading. Since running this study, we’ve tested sentiment in some of our own ads, and we were pretty surprised by the results.

Today, we’re going to dive into exactly what “sentiment” can look like in AdWords creative—text or display—and look at two examples of positive-vs-negative ad creative tests from WordStream’s very own account (along with the results).

We’ll share insights into what worked and what didn’t during our sentiment-focused split testing, offering tips you can use to inform your own heart-tugging creative along the way.

But first…

What does sentiment look like in AdWords?

“Sentiment” as I’m using it here refers to an attempt to play on emotional triggers through ad creative.

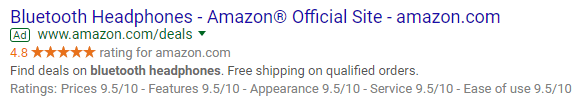

Most text ads you see atop the SERP live in “neutral” territory. When I search for Bluetooth headphones, I’m served the following ad:

To the point? Sure. Loaded with pertinent information that may very well drive my decision to purchase? You betcha. Sentimental? Hell no.

It’s important to note that being devoid of sentiment or emotion doesn’t make an ad bad. Remember, roughly half of the top-performing ads in our study had neutral sentiment.

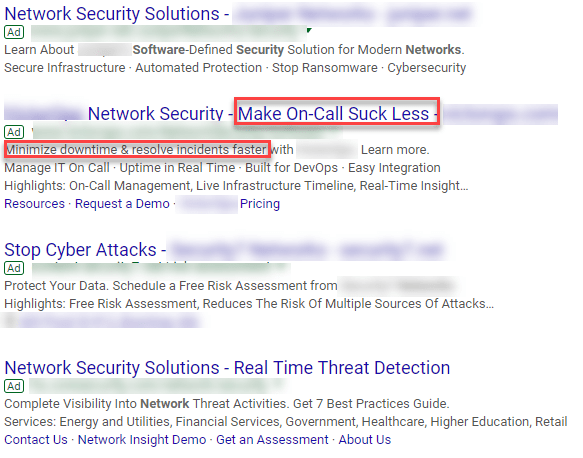

Other searches, however, yield ads that address a prospect’s pain points and a smattering of sentiment. Take this SERP for “network security software,” for instance (brand-specific copy has been blurred):

The ads in positions 1, 3, and 4 make solid use of the search query and speak to the benefits of their individual solutions. There is nothing wrong with this approach. At. All.

But take a look at the ad in position 2. Notice anything different (outside of the big red squares, I mean)?

The language in the second headline represents what I’d call a ZAG. Where the other text ads are a bit, well, bland, the ad in position 2 uses colloquial language (“this SUCKS mom, I don’t wanna clean my room!”) to make an emotional appeal. It uses negative sentiment to say “we get it.”

On the Display Network and in Gmail ads, it can be much easier to convey sentiment because you can use image creative to do some of the heavy lifting. Case in point…

^most definitely an example of positive sentiment, right? I jest.

This advertiser saw a 47% increase in CTR after switching to creative that leveraged negative sentiment, compared with its previous ads featuring positive imagery. (So while only 2% of the top-performing text ads in our study were emotionally negative, there will certainly be cases in which negative sentiment wins.)

In the ad copy analysis I referenced at the beginning of this post, we used the Vader sentiment analysis tool in concert with Python’s NLTK library to determine the sentiment (positive, negative, or neutral) evident in our data set. You don’t need a fancy laser sword to implement sentiment in your own ads. You just need a bit of intuition.
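For the curious, here’s roughly how the scoring step can work. This is a minimal sketch, not the script behind our study. It assumes VADER’s compound score, which NLTK exposes as `SentimentIntensityAnalyzer().polarity_scores(text)["compound"]` once you run `nltk.download("vader_lexicon")`. It also assumes the ±0.05 cutoffs suggested in VADER’s documentation; we haven’t confirmed that our analysis used those exact cutoffs. The helper names are hypothetical. The classifier takes precomputed scores, so it runs without downloading the lexicon.

```python
# Hypothetical helpers: bucket VADER compound scores (range -1.0 to 1.0)
# into positive / negative / neutral. The +/-0.05 cutoffs follow the
# convention in VADER's documentation; they are an assumption here.
POS_THRESHOLD = 0.05
NEG_THRESHOLD = -0.05


def label_sentiment(compound: float) -> str:
    """Map a single VADER compound score to a sentiment label."""
    if compound >= POS_THRESHOLD:
        return "positive"
    if compound <= NEG_THRESHOLD:
        return "negative"
    return "neutral"


def sentiment_share(compound_scores: list[float]) -> dict[str, float]:
    """Return the fraction of ads that falls into each sentiment bucket."""
    counts = {"positive": 0, "negative": 0, "neutral": 0}
    if not compound_scores:
        return {label: 0.0 for label in counts}
    for score in compound_scores:
        counts[label_sentiment(score)] += 1
    total = len(compound_scores)
    return {label: n / total for label, n in counts.items()}


if __name__ == "__main__":
    # With NLTK installed and the lexicon downloaded, a real score comes from:
    #   from nltk.sentiment import SentimentIntensityAnalyzer
    #   SentimentIntensityAnalyzer().polarity_scores(ad_text)["compound"]
    # The scores below are made-up placeholders, not study data.
    example_scores = [0.62, 0.0, -0.41, 0.03, 0.25]
    print(sentiment_share(example_scores))
```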

Consider your vertical, target demographic, and the way your competitors talk about themselves. Use that information to formulate potential positive and negative ad creative, and begin testing. If this is your first go-around, you’ll want to make sure that you conduct this sort of A/B testing in a measured fashion.

“Allen, what does that mean?!”


A/B testing for dummies

Because AdWords is a fickle beast, historical performance plays a massive role in present and future performance. As such, simply pausing your existing creative and replacing it with a brand-new positive ad and an equally new negative ad will result in a bad time: neither replacement has any performance history to lean on.

Instead, test one sentiment-based variant against your existing ad copy for at least two weeks.

When I say “test against,” I don’t mean “maintain your existing ad rotation, insert new ad copy, and check on your account in a fortnight.” You’re a smart advertiser; if you’ve found high-performing ad creative and aren’t currently split-testing, you’ve probably got your ad rotation set to “Optimize for conversions.”

This is not inherently bad; it just means that by testing brand-new POSITIVE ad copy against your top-performing (probably neutral) ad, you’re hamstringing the former. You told Google to optimize for conversions, and historically, the ad that converts is the one that already existed. Google, such a good listener!

Instead, before you push a sentiment-centric creative test live, be sure to change your ad rotation setting (which lives in the Settings tab at the campaign level) to “Rotate evenly,” as shown below:

From here, all you need to do is mark your ad variants with “Positive” and “Negative” labels. This makes it possible to review the data in the Dimensions tab and view performance in aggregate. (More advanced users can leverage the Experiments feature in AdWords to run more complex A/B tests, but for most advertisers, ensuring even ad rotation and using labels is more than enough.)
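You can also crunch the labeled data outside the interface. The roll-up comes down to summing clicks, impressions, and conversions per label, then computing rates. Here’s a minimal sketch. The `AdRow` record, its field names, and the numbers are all hypothetical, not an AdWords export format. It also assumes conversion rate = conversions / clicks.

```python
from dataclasses import dataclass


@dataclass
class AdRow:
    # Hypothetical ad-level record; field names are illustrative only.
    label: str
    impressions: int
    clicks: int
    conversions: int


def summarize_by_label(rows: list[AdRow]) -> dict[str, dict[str, float]]:
    """Sum raw counts per label, then derive CTR and conversion rate."""
    totals: dict[str, dict[str, int]] = {}
    for row in rows:
        t = totals.setdefault(
            row.label, {"impressions": 0, "clicks": 0, "conversions": 0}
        )
        t["impressions"] += row.impressions
        t["clicks"] += row.clicks
        t["conversions"] += row.conversions

    summary: dict[str, dict[str, float]] = {}
    for label, t in totals.items():
        summary[label] = {
            **t,
            # CTR = clicks / impressions
            "ctr": t["clicks"] / t["impressions"] if t["impressions"] else 0.0,
            # Assumed definition: conversion rate = conversions / clicks
            "conv_rate": t["conversions"] / t["clicks"] if t["clicks"] else 0.0,
        }
    return summary


if __name__ == "__main__":
    # Made-up rows for illustration only.
    rows = [
        AdRow("Positive", 1000, 40, 4),
        AdRow("Positive", 500, 10, 1),
        AdRow("Negative", 1200, 60, 9),
    ]
    for label, stats in summarize_by_label(rows).items():
        print(label, f"CTR {stats['ctr']:.2%}", f"conv rate {stats['conv_rate']:.2%}")
```

Summing raw counts before dividing, rather than averaging each ad’s rates, weights every ad by its actual traffic. That way a low-volume ad can’t skew its label’s totals.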

Now you know:

- What sentiment is

- How it can impact ad performance

- How to rotate ad copy like a savvy veteran

I’d say you’re ready to dig into some examples!

Example #1: Testing Sentiment in Search Ad Creative

At WordStream we have a pretty valuable tool called the AdWords Performance Grader. You may have heard of it. In fact, a lot of people have.

This results in significant search volume for the keyword “AdWords performance grader.” Why is this particularly valuable? Well, aside from being the name of a tool we own (which effectively makes it a branded term), it also conveys some great search intent.

Think about it.

Why would someone search for an AdWords performance grader? Probably because they think their AdWords account could perform more efficiently, right? This is a scenario that begs for ad copy that speaks to sentiment.

For the longest time, we implemented negative ad creative, like so:

It’s not as though the ad calls prospects dolts by any means, but there’s an unsubtle implication that the searcher has been doing something wrong: they’ve been “wasting money in AdWords.” Once our data showed that top-performing ads tended to lean toward positivity, though, we thought it high time to test our top performer against something more uplifting.

(Note that, since the ad conveying negative sentiment was the incumbent, we didn’t test it against a neutral ad.)

Between July 7 and July 31, we ran the “Stop Wasting Money in AdWords” ad against this beauty:

As you can see, this variant exudes positivity from every syllable. Instead of telling prospects to “stop wasting,” it encourages them to “get the most out of.” The two phrases convey essentially the same idea, framed with different sentiments.

Which do you think performed better during that three(ish) week period?

The negative ad had a conversion rate 18.8% higher than the positive ad’s. That’s a pretty ridiculous difference, but it’s nothing compared to what we saw in click-through rate: the negative ad’s CTR was 67.29% higher than the positive ad’s.
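Both figures are relative lifts: (negative ad’s rate - positive ad’s rate) / positive ad’s rate. The sketch below computes that. As an extra step this post doesn’t describe, it also runs a standard pooled two-proportion z-test to check whether a CTR gap is bigger than random noise. The function names and the click and impression counts are hypothetical; the raw counts behind these tests aren’t shown here.

```python
import math


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Return how much higher rate_a is than rate_b, as a fraction of rate_b."""
    return (rate_a - rate_b) / rate_b


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    # Pooled rate under the null hypothesis that both ads share one true rate.
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 300 clicks on 10,000 impressions (3.0% CTR)
    # versus 180 clicks on 10,000 impressions (1.8% CTR).
    lift = relative_lift(300 / 10_000, 180 / 10_000)
    z, p = two_proportion_z_test(300, 10_000, 180, 10_000)
    print(f"CTR lift: {lift:.1%}, z = {z:.2f}, p = {p:.2g}")
```

A p-value below your chosen threshold suggests the difference is unlikely to be noise; 0.05 is a common convention. With low traffic, even a big-sounding lift can fail that check. That’s one more reason to let a test run for a few weeks.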

What does this tell us?

Well, at least among these searchers, people just aren’t into positivity when it comes to their ad spend.

Example #2: Testing Sentiment in Display Ad Creative (Gmail)

A/B testing with Display creative can be tricky because there are so many gosh darn variables. Concept, color, and copy can all have a significant impact on performance. Adding a healthy dose of sentiment to the mix can make things reallllly interesting.

To discover the potential impact of positive and negative sentiment in image ad creative, we decided to run two comparable creative concepts using Gmail ads. On the positive side, a smiling swine is being filled with coinage; on the negative side, a broken boar’s cash innards are fluttering into the ether. We ran this test in two mutually exclusive audiences for the entire month of June.

Here’s the creative, for reference:

As you can see, the copy here is quite close to what we used in the text ad sentiment test. The results, however, were the opposite: the positive ad drove an astounding 50% more conversions than the negative ad.

What does this tell us? Well, that people generally dislike dead cartoon pigs! Duh.

On a more serious note, these results speak to the fact that image creative can play a pivotal role in establishing sentiment and, thus, driving results.

(Note: We realize that there are a lot of differences between the two ads; sometimes changing the button copy alone is enough to alter the results of a test. If you want to be absolutely sure that you know what’s driving the improvement in performance between tests, only change one variable at a time.)

The Upshot

As you can see from these tests, when it comes to marketing with emotion, there’s no hard-and-fast rule that says one sentiment always works better in text ads and the other always works better in display. It depends on your industry, your target audience, the offer in question, and countless other variables. So you should always test rather than assume.

The key takeaway is that changing the sentiment in your ads, whether it’s from positive to negative or vice versa, or from neutral to a more pronounced emotion, can make a big difference in your CTR and conversion rates. So why not test and find out what your would-be customers respond to? (And let us know what you find!)
