Everybody* knows that A/B testing best practices can help you run faster, jump higher and increase conversions. When it comes to providing users with an engaging and rewarding online experience, good A/B tests are a more effective treatment for embarrassing landing pages than topical ointment. However, approaches to designing A/B tests that provide accurate and representative results can be uncertain at best and outright divisive at worst.

A/B testing is an invaluable tool for landing page optimization when implemented correctly. To avoid wasting time, money and effort on changes that will yield little to no benefit – or even make things worse – take the following points into consideration during your next project.

* Not everybody knows this

Prove It

Before you casually ask your designers and copywriters to create dozens of different buttons or calls to action by the end of the day, it’s crucial that you have a hypothesis you wish to test. After all, without at least some idea of the possible outcomes, A/B testing becomes A/B guessing. Similarly, without a hypothesis, discerning the true impact of design changes can be difficult and may lead to additional (and potentially unnecessary) testing, or missed opportunities that could have been identified had the test been performed with a specific objective in mind.

Just as scientists approach an experiment with a hypothesis, you should enter the testing phase with a clear idea of what you expect to see – or at the very least, some notion of what you think will happen.

Formulating a hypothesis doesn’t have to be complicated. You could A/B test whether subtle changes to the phrasing of a call to action result in more conversions, or whether a slightly different color palette reduces your bounce rate or improves your dwell time.

Whatever aspect of your site you decide to test, be sure that everyone involved in the project is aware of the core hypothesis long before any code, copy or assets are changed.

Key Takeaway: Before you begin your A/B test, know what you’re testing and why. Are you evaluating the impact of subtle changes to the copy of a call to action? Form length? Keyword placement? Make sure you have an idea of what effect changes to the variation will have before you start A/B split testing.

Take a Granular Approach to A/B Testing

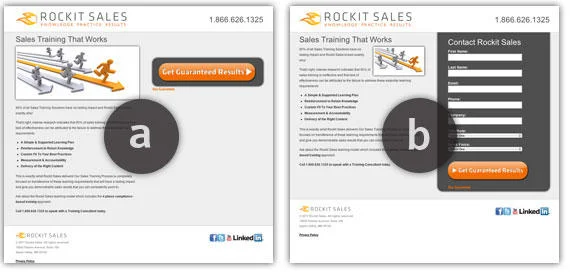

One of the most common mistakes people make when performing A/B tests is comparing the results of landing page layouts that are too radically different from one another. Although it might be tempting to test the effectiveness of two completely different pages, doing so may not yield any actionable data. This is because the greater the differences between two versions of a page, the harder it is to determine which factors caused an improvement – or decline – in conversions.

Don’t be seduced by the idea that all variations in an A/B test have to be spectacular, show-stopping transformations. Even subtle changes can have a demonstrable effect, such as slightly reformatting a list of product features to persuade users to request more information, or phrasing a call to action differently to drive user engagement.

Even something as “harmless” as minor differences in punctuation can have a measurable impact on user behavior. Perry Marshall, marketing expert and author of “The Ultimate Guide to Google AdWords,” recalled an A/B test in which the CTRs of two ads were compared. The only difference between the two? The inclusion of a single comma. Despite this seemingly irrelevant detail, the variant that featured the comma had a CTR of 4.40% – an improvement of 0.28 percentage points over the control.
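To put that comma in perspective, it helps to separate percentage points from relative improvement. The short sketch below works backward from the two figures quoted above (a 4.40% CTR and a 0.28-point gain) to the implied control CTR and the relative lift. The function name `relative_lift` is just an illustrative helper, not part of any analytics tool.

```python
def relative_lift(variant_rate: float, control_rate: float) -> float:
    """Return the variant's improvement as a fraction of the control rate."""
    return (variant_rate - control_rate) / control_rate


variant_ctr = 0.0440                 # 4.40%, the variant with the comma
control_ctr = variant_ctr - 0.0028   # 0.28 percentage points lower

lift = relative_lift(variant_ctr, control_ctr)
print(f"Implied control CTR: {control_ctr:.2%}")
print(f"Relative lift: {lift:.1%}")
```

A gain of 0.28 percentage points sounds tiny, but relative to a control CTR of about 4.12% it works out to a lift of roughly 6.8%.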

However, that’s not to say that comparing user behavior on two very different versions of a page is completely without merit. In fact, doing so earlier in the testing phase can inform design decisions further down the pipeline. A/B testing best practices dictate that the greater the difference between two versions of a page, the earlier in the testing process these variations should be evaluated.

Key Takeaway: Test one element at a time so you’ll know for sure what change was responsible for the uptick in conversions. Once you’ve determined a winner, test another single change. Keep iterating until your conversion rate is maxed out.

Test Early, Test Often

Scientists rarely use the results of a single experiment to prove or disprove their hypotheses, and neither should you. To adhere to A/B testing best practices, you should evaluate the impact of one variable per test, but that doesn’t mean you’re restricted to performing just one test overall. That would be silly.

A/B testing should be a granular process. Although the results of the first test may not provide you with any real insight into how your users behave, they might allow you to design additional tests to gain greater understanding about what design choices have a measurable impact on conversions.

The sooner you begin A/B testing, the sooner you can eliminate ineffective design choices or business decisions based on assumptions. The more frequently you test certain aspects of your site, the more reliable the data will be, enabling you to focus on what really matters – the user.

Key Takeaway: Don’t put off A/B testing until the last minute. The sooner you get your hands on actual data, the sooner you can begin to incorporate changes based on what your users actually do, not what you think they’ll do. Test frequently to make sure that adjustments to your landing pages are improving conversions. When you’re building a landing page from scratch, keep the results of early tests in mind.

Be Patient With A/B Tests

A/B testing is an important tool in the marketing professional’s arsenal, but meaningful results probably won’t materialize overnight. When designing and performing A/B tests, be patient – ending a test prematurely might feel like saving time, but it could end up costing you money.

Economists and data scientists rely on a principle known as statistical significance to identify and interpret the patterns behind the numbers. Statistical significance lies at the very heart of A/B testing best practice, as without it, you run the risk of making business decisions based on bad data.

Statistical significance is a measure of how unlikely it is that an effect observed during an experiment or test would have shown up through mere chance if the change you made had no real effect. To arrive at statistically significant results, marketers must have a sufficiently large data set to draw upon. Not only do larger volumes of data provide more precise estimates, they also make it easier to tell a real effect apart from normal variation – the typical random fluctuations around the average result. Unfortunately, it takes time to gather this data, even for sites with millions of unique monthly visitors.
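One common way to check significance for conversion rates is a two-proportion z-test. The sketch below is a minimal version using only Python’s standard library. The visitor and conversion counts are made up for illustration, and the 0.05 threshold is a widely used convention, not a rule from this article. Your testing tool may use a different method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value), where p_value is the chance of seeing a gap at
    least this large if both versions truly converted at the same rate.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that there is no real difference
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical numbers: control converts 200 of 5,000, variant 250 of 5,000
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("Significant at 0.05" if p_value < 0.05 else "Not significant at 0.05")
```

With these invented counts the p-value lands below 0.05. Run the same rates on a tenth of the traffic (20 of 500 versus 25 of 500) and it no longer does, which is why sample size matters so much.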

If you’re tempted to cut a test short, step back for a moment. Take a deep breath. Grab a coffee. Do some yoga. Remember – patience is a virtue.

Key Takeaway: Resist the temptation to end a test early, even if you’re getting strong initial results. Let the test run its course, and give your users a chance to show you how they’re interacting with your landing pages, even when you’re testing with large user bases or on high-traffic pages.
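If you want a rough idea of how long “its course” might be, you can estimate the sample size before you start. The sketch below uses a standard normal-approximation formula for comparing two proportions. The 4% baseline, 10% relative lift, 5% significance level and 80% power are all assumptions chosen for illustration, so swap in your own numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, min_relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect a given lift.

    baseline: current conversion rate (e.g. 0.04 for 4%)
    min_relative_lift: smallest relative improvement worth detecting
    """
    p1 = baseline
    p2 = baseline * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Assumed scenario: 4% baseline conversion rate, hoping to detect a 10% lift
n = sample_size_per_variant(0.04, 0.10)
print(f"Visitors needed per variant: {n:,}")
```

Under these assumptions the answer is somewhere just under 40,000 visitors per variant. Divide that by your daily traffic per variant and you have a ballpark for how many days the test needs to run.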

Keep an Open Mind When A/B Testing

Remember how we emphasized the importance of forming a hypothesis before starting the testing phase? Well, just because you have an idea of the outcome of an A/B test doesn’t mean it’s going to happen – or that your original idea was even accurate. That’s OK, though, we won’t make fun of you.

Many a savvy marketer has fallen prey to the idea that, regardless of what her results tell her, the original hypothesis was the only possible outcome. This insidious thought often surfaces when user data paints a very different picture than the one that project stakeholders were expecting. When presented with data that differs significantly from the original hypothesis, it can be tempting to dismiss the results or the methodologies of the test in favor of conventional knowledge or even previous experience. This mindset can spell certain doom for a project. After all, if you’re so confident in your assumptions, then why A/B test in the first place?

Chris Kostecki, a seasoned marketing and PPC professional, can certainly attest to the importance of keeping an open mind when A/B testing. While evaluating two versions of a landing page, Chris discovered that the variant – which featured more positional copy and was further away from the product ordering page – outperformed the control by a substantial margin. Chris noted that although he was confident that the more streamlined page would result in more conversions, his A/B test results proved otherwise.

Remaining open to new ideas based on actual data and proven user behavior is essential to the success of a project. In addition, the longer the testing phase, and the more granular your approach, the more likely you are to discover new things about your customers and how they interact with your landing pages. This can lead to valuable insight into which changes will have the greatest impact on conversions. Let your results do the talking, and listen closely to what they tell you.

Key Takeaway: Users can be fickle, and trying to predict their behavior is risky. You’re not psychic, even if you do secretly have a deck of tarot cards at home. Use hard A/B test data to inform business decisions – no matter how much it surprises you. If you’re not convinced by the results of a test, run it again and compare the data.

Maintain Momentum

So, you’ve formulated your hypothesis, designed a series of rigorous tests, waited patiently for the precious data to trickle in, and carefully analyzed your results to arrive at a statistically significant, demonstrable conclusion – you’re done now, right? Wrong.

Successful A/B tests can not only help you increase conversions or improve user engagement, they can also form the basis of future tests. There’s no such thing as the perfect landing page, and things can always be improved. Even if everybody is satisfied with the results of an A/B test and the subsequent changes, the chances are pretty good that other landing pages can yield similarly actionable results. Depending on the nature of your site, you can either base future tests on the results of the first project, or apply A/B testing best practices to an entirely new set of business objectives.

Key Takeaway: Even highly optimized landing pages can be improved. Don’t rest on your laurels, even after an exhaustive series of tests. If everyone is happy with the results of the test for a specific page, choose another page to begin testing. Learn from your experiences during your initial tests to create more specific hypotheses, design more effective tests and zero in on areas of your other landing pages that could yield greater conversions.

Choose Your Own Adventure

No two scientific experiments are exactly alike, and this principle most definitely applies to A/B testing. Even if you’re only evaluating the impact of a single variable, there are dozens – if not hundreds – of external factors that will shape the process, influence your results and possibly cause you to start sobbing uncontrollably.

Take Brad Geddes, for example. Founder of PPC training platform Certified Knowledge, Brad recalled working with a client that had some seriously embarrassing landing pages. After much pleading and gnashing of teeth, Brad finally managed to convince his client to make some adjustments. The redesign was almost as bad as the original, but after being A/B tested, the new landing page resulted in an overall sitewide increase in profit of 76% – not too shabby for a terrible landing page.

Don’t approach the testing phase too rigidly. Be specific when designing your tests, remain flexible when interpreting your data, and remember that tests don’t have to be perfect to provide valuable insights. Keep these points in mind, and soon, you’ll be a seasoned A/B testing pro – and no, you don’t have to wear a lab coat (but you can if you want to, it’s cool).

Key Takeaway: Every A/B test is different, and you should remember this when approaching each and every landing page. Strategies that worked well in a previous test might not perform as effectively in another, even when adjusting similar elements. Even if two landing pages are similar, don’t make the mistake of assuming that the results of a previous test will apply to another page. Always rely on hard data, and don’t lose sleep over imperfect tests.
